Does the rise of AI signal a new ‘onslaught’ of phishing attacks?

In its most recent in-depth report, the Anti-Phishing Working Group, which has produced reports of phishing activities and trends for nearly 20 years, said there were over 1.2 million recorded attacks in the third quarter of 2022. This represented “a new record and the worst quarter for phishing that APWG has ever observed.”

This alarming trend seems likely to be further fuelled by the rise of AI-generated phishing, which, according to analysis published in Forbes, is set to help cyber criminals deliver an “onslaught” of attacks. According to one industry expert quoted by CNET, “The barrier to entry is getting lower and lower and lower to be hacked and to be phished.”

For example, AI technologies give hackers a powerful tool for increasing the sophistication, variety and accuracy of email content. Until now, grammatical and style errors have often given recipients a clue that emails might not be genuine, but the arrival of ChatGPT and other powerful tools has helped cybercriminals raise their game. On top of that, because bad actors no longer have to put the same levels of time and effort into producing email content, they can instead focus on increasing their output, putting further pressure on users and cybersecurity technologies.

The use of AI to industrialize phishing comes as experts also warn about its use as a way to quickly and effectively develop malware. In many ways, this is nothing new – bad actors have been finding ways to create novel and evasive malware for years. From techniques such as packing, encryption, and polymorphism to anti-analysis processes such as virtual machine and sandbox detection, malware authors are constantly finding new ways to beat AVs, sandbox systems and security researchers to deliver malicious payloads.

Proactive protection

The problem is that the development of ChatGPT and a myriad of other AI tools means that we are also not very far away from an explosion in AI-produced malware. And while ChatGPT is programmed to deny requests to create malware, recent media headlines suggest it’s already being used to develop code for malicious purposes.

To guard against the growing risks this situation presents, organizations need to remove threats from the equation before users are put in the position of having to make an unwise choice. Whilst most files people download or upload are harmless, it only takes one harmful file, delivered via a convincing-looking email, to slip through the net and expose an IT infrastructure to potentially serious and costly damage.

For instance, hyperlinks are often used in phishing attacks, with cybercriminals creating links that look legitimate and trustworthy on the surface, but once clicked, they take a user to a different destination, and a chain of malicious events is activated.

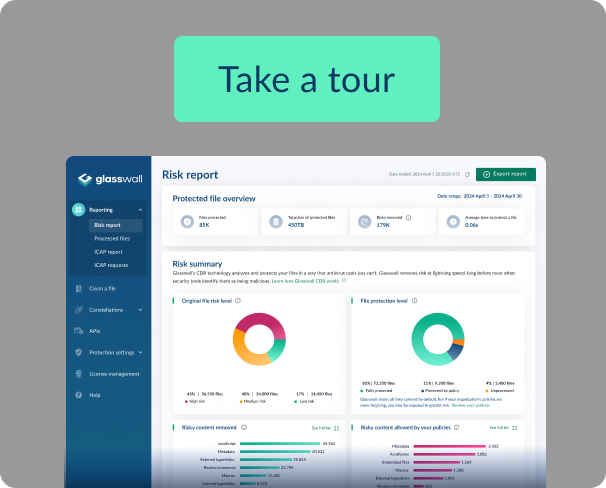
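
The link mismatch described above, where the text a user sees names one site while the underlying address points to another, can be illustrated with a small check. The following is a minimal sketch using only the Python standard library; the class name `LinkMismatchFinder`, the helper `host_of` and the example domains are hypothetical and chosen purely for illustration. It only catches the simplest case, where the visible text itself looks like a web address, and it does not handle lookalike domains, redirects or URL shorteners.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


def host_of(text):
    """Return the lowercase hostname if text looks like a web address, else None."""
    candidate = text if "://" in text else "//" + text
    try:
        host = urlparse(candidate).hostname
    except ValueError:
        # urlparse rejects some malformed input (e.g. unbalanced brackets).
        return None
    if host and "." in host.strip(".") and " " not in host:
        return host.lower()
    return None


class LinkMismatchFinder(HTMLParser):
    """Collects links whose visible text names a different host than the href."""

    def __init__(self):
        super().__init__()
        self._href = None      # href of the <a> tag currently open, if any
        self._text = []        # visible text collected inside that tag
        self.suspicious = []   # (visible_text, actual_href) pairs

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            shown = "".join(self._text).strip()
            shown_host = host_of(shown)
            real_host = host_of(self._href)
            # Flag only when both sides name a host and the hosts differ.
            if shown_host and real_host and shown_host != real_host:
                self.suspicious.append((shown, self._href))
            self._href = None


if __name__ == "__main__":
    # Hypothetical email body: the first link shows one host but targets another.
    body = (
        '<a href="http://login.example.net/verify">www.example.com</a> '
        '<a href="https://www.example.org/help">Help centre</a>'
    )
    finder = LinkMismatchFinder()
    finder.feed(body)
    for shown, href in finder.suspicious:
        print(f"Displayed as {shown!r} but points to {href!r}")
```

A check like this is still a detection step, so it shares the limitation discussed in this article: it can only flag patterns someone has thought to look for.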

So how can organizations stay ahead of the risks? In contrast to reactive AV and sandboxing solutions, Glasswall’s CDR (Content Disarm and Reconstruction) engine protects its users from file-based zero-day malware an average of 18 days before conventional AVs and detection systems do. Instead of looking for malicious content, our advanced CDR process treats all files as untrusted, validating, rebuilding and cleaning each one against its manufacturer’s ‘known-good’ specification.

Only safe, clean, and fully functioning files enter and leave an organization, allowing users to access files with full confidence. In a rapidly approaching future where AI is a go-to tool for cybercriminals and nation-state attackers, proactive protection against file-based threats has become more crucial than ever.