What Code Blue 2025 tells us about the growing risk hidden in everyday file handling

Code Blue is one of the most highly regarded cybersecurity conventions in the world, pairing targeted, high-level content with a strong community feel. Hosted annually in Tokyo, it combines the smaller venue and format of a BSides event with content and research at a level usually seen at much larger conventions.

One of its major strengths is the consistently high quality of its talks across all subject areas, with technical sessions diving deep into the affected technologies and the methods used to exploit them.

At last November's event, the opening keynote was delivered by Daniel Brandy, who presented DARPA’s work on Cyber Reasoning Systems (CRS). He focused on how AI is already being used on both sides of the security equation, not just to invent new attack techniques but to accelerate and scale existing methods. For instance, rather than producing fundamentally new malware, AI is enabling threat actors to generate large volumes of small file variants, each differing just enough to evade traditional detection.

This, he argued, increases uncertainty at the point of inspection: files become harder to classify with confidence even though the techniques behind them are familiar. The challenge is one of speed and variation, not radical innovation.
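To make the variation problem concrete, here is a toy sketch, not taken from the keynote, of why exact-match detection struggles with it. It assumes a blocklist keyed on SHA-256 digests and uses a harmless placeholder byte string; the names `make_variants` and `blocklist` are purely illustrative.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a byte string as hex."""
    return hashlib.sha256(data).hexdigest()


def make_variants(payload: bytes, count: int) -> list:
    """Produce `count` copies of the payload that each differ only in
    a 4-byte trailing counter (hypothetical stand-in for small edits)."""
    return [payload + i.to_bytes(4, "big") for i in range(1, count + 1)]


# Harmless placeholder content standing in for a known-bad file.
original = b"%PDF-1.7 placeholder sample content"

# An exact-match blocklist knows only the original file's digest.
blocklist = {sha256_hex(original)}

variants = make_variants(original, 1000)

# Every variant has a different digest, so none of them match.
missed = [v for v in variants if sha256_hex(v) not in blocklist]
print(f"{len(missed)} of {len(variants)} variants are not on the blocklist")
```

Real detection stacks layer heuristics and behavioural analysis on top of hashes, so this overstates the gap. It does show why cheap, high-volume variation erodes confidence at the point of inspection.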

From a defensive perspective, AI is also being applied to file inspection and analysis, but current models still struggle to reason reliably about failure conditions and unexpected outcomes.

When ‘low-risk’ files and devices become high-impact attack surfaces

One of the most striking pieces of research focused on Amazon Kindle devices, specifically challenging the assumption that certain platforms and file types represent minimal risk.

The research showed how modern devices routinely process downloaded files automatically, using background scanning, parsing, and rendering services that operate with little user visibility. In the case of Kindle, these services monitor for new content, determine the file type, extract metadata, and make the content available to the user, all without requiring any user interaction.
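The "determine the file type" step is worth a closer look, because it is where implicit trust often creeps in. The sketch below is a generic illustration rather than a description of how Kindle works. It compares the type a filename claims against the file's leading magic bytes, using three well-known signatures. The `MAGIC` and `EXT_TO_TYPE` tables and the `check_file` helper are hypothetical names chosen for this example.

```python
from pathlib import PurePath
from typing import Optional

# Well-known leading byte signatures (a deliberately tiny table).
MAGIC = {
    b"%PDF-": "pdf",
    b"PK\x03\x04": "zip",          # also the container for EPUB
    b"\x89PNG\r\n\x1a\n": "png",
}

# What each extension claims to be (illustrative, not exhaustive).
EXT_TO_TYPE = {".pdf": "pdf", ".zip": "zip", ".epub": "zip", ".png": "png"}


def sniff_type(data: bytes) -> Optional[str]:
    """Return the type implied by the file's leading bytes, if known."""
    for magic, kind in MAGIC.items():
        if data.startswith(magic):
            return kind
    return None


def check_file(name: str, data: bytes) -> str:
    """Compare the claimed type (extension) with the sniffed type."""
    claimed = EXT_TO_TYPE.get(PurePath(name).suffix.lower())
    actual = sniff_type(data)
    if actual is None:
        return "unknown"      # no signature matched: treat with caution
    if claimed != actual:
        return "mismatch"     # includes unrecognised extensions
    return "consistent"


print(check_file("book.pdf", b"%PDF-1.7 ..."))     # consistent
print(check_file("cover.png", b"PK\x03\x04 ..."))  # mismatch
print(check_file("notes.txt", b"hello"))           # unknown
```

A consistent result only means the label matches the first few bytes. It says nothing about whether the rest of the file is safe to parse, which is the gap the research highlights.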

Crucially, if these background services crash, they are designed to restart automatically and repeatedly reprocess the same file. The research showed how this behaviour creates what can be described as a persistent execution opportunity, in which a single file may be handled multiple times without any user involvement. Similar automated reprocessing patterns also exist in enterprise environments, where files are routinely ingested, scanned, and parsed by background services.
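One common way to close off this kind of retry loop is to cap how many times a given file may be attempted and quarantine it once the cap is reached. The following is a minimal sketch under stated assumptions. A Python exception stands in for a service crash, the attempt counter lives in memory (a real service would need to persist it across restarts), and `Ingestor`, `fragile_parser`, and `MAX_ATTEMPTS` are hypothetical names rather than anything from the research.

```python
import hashlib
from collections import Counter

MAX_ATTEMPTS = 3  # assumed policy value, chosen for illustration


class ParseError(Exception):
    """Stand-in for a parser crash."""


def fragile_parser(data: bytes) -> str:
    """Toy parser that fails on inputs starting with b'BAD'."""
    if data.startswith(b"BAD"):
        raise ParseError("malformed header")
    return data.decode("utf-8", errors="replace")


class Ingestor:
    """Processes files, but stops retrying content that keeps failing."""

    def __init__(self, parser, max_attempts: int = MAX_ATTEMPTS):
        self.parser = parser
        self.max_attempts = max_attempts
        self.attempts = Counter()   # digest -> number of parse attempts
        self.quarantined = set()    # digests that will not be retried

    def process(self, data: bytes) -> str:
        digest = hashlib.sha256(data).hexdigest()
        if digest in self.quarantined:
            return "quarantined"    # never hand the file to the parser again
        self.attempts[digest] += 1
        try:
            self.parser(data)
        except Exception:
            if self.attempts[digest] >= self.max_attempts:
                self.quarantined.add(digest)
                return "quarantined"
            return "retry"
        return "ok"


ingestor = Ingestor(fragile_parser)
results = [ingestor.process(b"BAD file") for _ in range(5)]
print(results)
print(ingestor.process(b"good file"))
```

Keying the counter on a content digest rather than a filename means a renamed copy of the same file does not reset the count. It does nothing against the near-identical variants described earlier, which is why a retry cap limits exposure rather than removing it.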

The research went on to demonstrate that a successful compromise could potentially be triggered through ordinary content delivery mechanisms, including files that appear legitimate to both users and platforms. The broader implication is that devices that, from a security perspective, are generally considered peripheral or low risk can still provide attackers with a foothold.

Complexity, opacity and the spaces attackers exploit

Beyond the security risks associated with individual devices, a recurring theme was the way modern platforms are built from layers of components that are undocumented, opaque or poorly understood outside their original design context.

For example, research into low-level mobile subsystems showed how attackers are increasingly targeting digital signal processors, firmware and kernel-adjacent services that reside outside the reach of traditional security tools. In these environments, vulnerabilities often emerge not from a single catastrophic flaw, but from assumptions made at the boundaries between components, where responsibility and visibility can break down.

In practice, these layers sit below EDR, outside SIEM visibility, and beyond most security teams’ threat models. From a risk perspective, exploitation frequently occurs in these transitional spaces, where complexity itself creates reliable blind spots. Moreover, as systems become more modular and abstracted, these gaps become harder to address and, as a result, remain highly attractive to attackers.

The Glasswall perspective

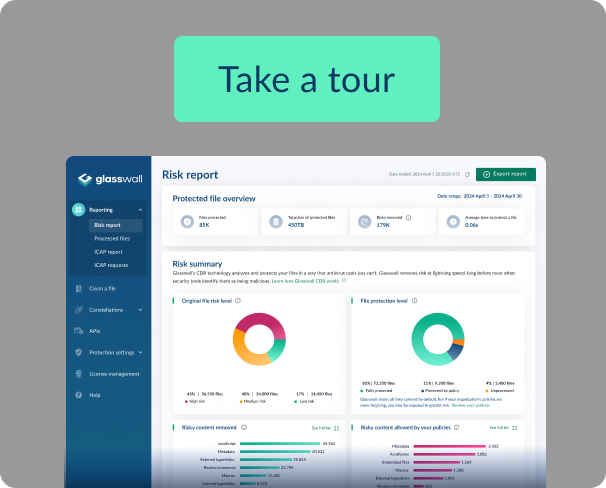

Code Blue is a highly regarded, research-led event that consistently presents security findings ahead of broader industry awareness. From a Glasswall perspective, its themes and content once again reinforced how difficult it has become for organisations to maintain visibility and control over files as they move across modern environments.

In this context, the risk lies less in overtly malicious behaviour and more in the implicit trust placed in file formats and the automated pipelines that handle them. For security leaders, this underlines the importance of understanding not just where files are sent, but how they are processed, particularly in environments where those processes happen automatically and at scale. Ultimately, the real question is no longer whether a file is ‘malicious’, but whether its processing can be made safe under effectively unlimited variation.
